for taking the VCP 5 exam. Its purpose is basically to give me a setup for getting better acquainted with certain vSphere features and, maybe, for testing various fault / resolution scenarios. This post is to help me retain the details of the resulting configuration. Since this is not an end-all, be-all lab, if you have any suggestions, I'd like to hear them.

Additionally, all of the software detailed within can (at the time of posting) be downloaded and used either freely or for evaluation / testing purposes:

- vSphere software: these components can be downloaded from VMware.com and used fully featured for evaluation purposes for 60 days before requiring a license
- Windows Server 2008 (for our vSphere client): can be downloaded from Microsoft.com and used fully featured for evaluation purposes for 180 days
- CentOS: can be downloaded from CentOS.org and freely used
- FreeBSD: can be downloaded from FreeBSD.org and freely used
- Solaris: can be downloaded from Oracle.com and freely used for testing purposes (otherwise it requires an Oracle licensing contract)
- bind 9.8.1-p1 (name server software): can be downloaded from isc.org and freely used
- VirtualBox: can be downloaded from VirtualBox.org and freely used

In designing my lab setup, I went scrounging around for possible ideas and stumbled across Simon Gallagher's "vTARDIS". After taking some pointers from Simon's setup and others, I came up with the following:

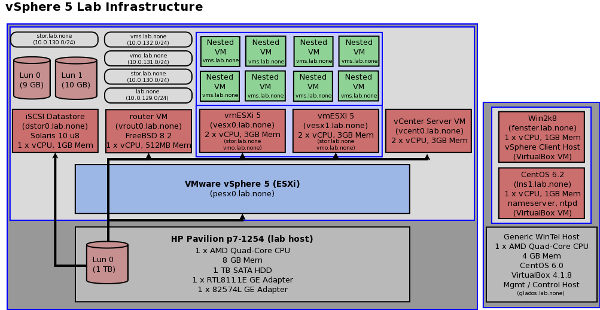

Basically, we end up with 2 physical hosts ("management" and vSphere lab) and 2 layers of virtualization on the vSphere lab host. While all aspects are functional for my needs, I wouldn't necessarily choose some of these components for a production environment, such as FreeBSD for the router (though it is capable of being one). I simply chose components with which I was already familiar and could quickly implement, so some substitutions could be made. Additionally, all hosts and VMs are running 64-bit OSes.

Moving along, the setup is primarily contained on 1 standalone host (the vSphere lab host) with VMware ESXi 5 installed directly on it (pesx0). On that physical ESXi host (pesx0), I then created 5 VMs (the 1st virtualization layer) to handle the various vSphere resources and infrastructure, so the setup is:

- 4 configured networks:
  + 10.0.129.0/24 (lab.none. domain); management network
  + 10.0.130.0/24 (stor.lab.none. domain); storage network
  + 10.0.131.0/24 (vmo.lab.none. domain); vMotion network
  + 10.0.132.0/24 (vms.lab.none. domain); VM network
- 1 x physical (bare metal) ESXi 5 installation (pesx0.lab.none.)
  + supports the overall lab infrastructure (1st layer of virtualization)
  + connected and IP'd on all networks
  + configured in the vSphere inventory on vcent0 as a separate datacenter and host (solely for ease of management)
  + uses the local 1 TB disk as its datastore to provide storage for the 1st layer (infrastructure) VMs
  + assumes full allocation of physical system resources: 1 x AMD 2.2 GHz quad-core CPU, 8 GB of memory, 1 TB SATA HDD, 1 x RTL8111E GE NIC (unused), 1 x 82574L GE NIC
- 1 x iSCSI datastore (dstor0.lab.none, dstor0.stor.lab.none)
  + infrastructure VM (1st layer of virtualization)
  + provides the backing datastore for the non-infrastructure VMs, that is, those supported by the nested ESXi 5 hosts (vesx0, vesx1)
  + runs Solaris 10 u8, using ZFS volumes to provide the iSCSI target LUNs presented to both vesx0 and vesx1
  + connected to 2 networks, the management network and the storage network
  + storage network interface configured with an MTU of 8106 (see note 0)
  + configured with 1 vCPU, 1 GB of memory
  + storage (thick provisioned, eager zeroed):
    - disk0: 8 GB (system disk)
    - disk1: 20 GB (storage disk backing both datastore LUNs: LUN0, 9 GB; LUN1, 10 GB)
- 1 x router (vrout0.lab.none)
  + infrastructure VM (1st layer of virtualization)
  + connected and IP'd on all networks
  + provides routing for all networks, though routing is only truly needed for the VM network (the others are simply for my own testing)
  + runs FreeBSD 8.2
  + configured with 1 vCPU, 512 MB of memory
  + storage (thick provisioned, eager zeroed):
    - disk0: 4 GB (system disk)
- 2 x virtual ESXi 5 installations (vesx0.lab.none, vesx1.lab.none)
  + infrastructure VMs (1st layer of virtualization)
  + connected and IP'd on all networks, though they don't necessarily need to be IP'd on the VM network
  + storage network and vMotion network set to an MTU of 8106 (see note 0)
  + support all nested VMs (2nd layer of virtualization)
  + each configured with 2 vCPUs, 3 GB of memory
  + storage (thick provisioned, eager zeroed):
    - disk0: 2 GB (system disk)
- 1 x vCenter Server appliance (vcent0.lab.none)
  + infrastructure VM (1st layer of virtualization)
  + connected and IP'd on the management network
  + originally deploys expecting 8 GB of memory for itself; I later tuned that down to 3 GB
  + additionally configured with 2 vCPUs
  + this host could have been deployed as a VM to either vesx0 or vesx1; however, I decided to keep it outside of the nested VMs, in the 1st virtualization layer (as an aside, the vCenter Server appliance is expected to run as a VM, not as the effectively "standalone" host I have set it up as)
  + storage (thin provisioned):
    - disk0: 22 GB (system disk)
    - disk1: 60 GB (vCenter storage / database)

Within a normal environment, the infrastructure hosts (not including the vCenter Server appliance) would all be physical hosts. (If vCenter Server were installed as an application on a Windows host, it could run on a physical host as well.) Since I don't have that many hosts in my lab, they are all VMs in the 1st layer of virtualization.

Of note, I had originally thin provisioned all of the infrastructure VMs above. Due to performance issues, I inflated all of the infrastructure VMDK files (except for those of the vCenter Server appliance, vcent0), which left those VMDKs thick provisioned, eager zeroed. As a result, the issues (I/O contention related) were mostly resolved. In the 2nd layer of virtualization, most of the nested VMs are configured only with thin provisioned storage and minimal system resources, and have no OS installed. Some, however, do have either FreeBSD or CentOS installed for testing things such as virtual resource presentation, handling during vSphere events (like vMotion), vSphere's "guest customizations" feature, and my general curiosities.
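To put the provisioning figures from the list above in one place, here is a small Python sketch that totals the disk space each 1st-layer VM claims on pesx0's local datastore and checks that dstor0's two LUNs fit on its 20 GB disk1. The sizes are the ones listed above; the data layout and the function name `provisioned_totals` are hypothetical illustration, not anything vSphere provides, and the sketch ignores per-VM file overhead (swap files, logs) and ZFS metadata.

```python
# Disk allocations (GB) for the 1st-layer infrastructure VMs on pesx0,
# taken from the list above. "thick" = thick provisioned, eager zeroed
# (fully allocated up front); "thin" = grows on demand, so its figure
# is only a worst-case upper bound.
INFRA_DISKS = {
    "dstor0": [("thick", 8), ("thick", 20)],
    "vrout0": [("thick", 4)],
    "vesx0": [("thick", 2)],
    "vesx1": [("thick", 2)],
    "vcent0": [("thin", 22), ("thin", 60)],
}

# ZFS-backed iSCSI LUNs carved out of dstor0's 20 GB disk1.
DSTOR0_DISK1_GB = 20
LUNS_GB = {"LUN0": 9, "LUN1": 10}


def provisioned_totals(disks):
    """Sum configured disk sizes (GB) per provisioning type across all VMs."""
    totals = {"thick": 0, "thin": 0}
    for vm_disks in disks.values():
        for kind, size_gb in vm_disks:
            totals[kind] += size_gb
    return totals


if __name__ == "__main__":
    totals = provisioned_totals(INFRA_DISKS)
    print(f"thick (allocated up front): {totals['thick']} GB")
    print(f"thin (worst case):          {totals['thin']} GB")
    lun_total = sum(LUNS_GB.values())
    print(f"LUNs: {lun_total} GB of {DSTOR0_DISK1_GB} GB on dstor0 disk1")
    if lun_total > DSTOR0_DISK1_GB:
        print("warning: LUNs exceed the backing disk")
```

The thick, eager zeroed disks claim their full 36 GB immediately, while vcent0's thin disks (82 GB at most) only grow as data is written. That is the trade made above: more space committed up front in exchange for not allocating and zeroing blocks on first write.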

In the above, I have somewhat overcommitted both the available physical resources and the presented virtual resources. This won't be a problem for simple lab testing; however, it would never win a performance benchmark. To be clear, due to the overcommitment of resources, the above setup would see quite a bit of resource contention when placed under load.
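To make "somewhat overcommitted" concrete, the Python sketch below uses only the vCPU and memory figures from the list above to compute the vCPU-to-core and configured-to-physical memory ratios for the 1st-layer VMs on pesx0. It is plain arithmetic under the assumption that each of the 4 physical cores counts as one schedulable CPU; it leaves out hypervisor and per-VM overhead, as well as the nested 2nd-layer VMs, which add further overcommitment on top of vesx0 and vesx1.

```python
# Physical resources of the vSphere lab host (pesx0), as listed above.
HOST_CORES = 4          # 1 x AMD quad-core CPU
HOST_MEM_MB = 8 * 1024  # 8 GB

# (vCPUs, memory in MB) configured for each 1st-layer infrastructure VM.
INFRA_VMS = {
    "dstor0": (1, 1024),
    "vrout0": (1, 512),
    "vesx0": (2, 3 * 1024),
    "vesx1": (2, 3 * 1024),
    "vcent0": (2, 3 * 1024),
}


def overcommit_ratios(vms, cores, mem_mb):
    """Return (vCPU:core ratio, configured:physical memory ratio).

    Ignores hypervisor and per-VM overhead, so actual memory pressure
    is somewhat higher than the ratio suggests.
    """
    vcpus = sum(cpu for cpu, _ in vms.values())
    mem = sum(m for _, m in vms.values())
    return vcpus / cores, mem / mem_mb


if __name__ == "__main__":
    cpu_ratio, mem_ratio = overcommit_ratios(INFRA_VMS, HOST_CORES, HOST_MEM_MB)
    print(f"vCPUs per physical core:    {cpu_ratio:.2f}")  # 2.00
    print(f"configured/physical memory: {mem_ratio:.2f}")  # 1.31
```

The infrastructure VMs alone are configured with 8 vCPUs on 4 cores and 10.5 GB of memory on an 8 GB host, so contention under load is expected rather than surprising.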

The second physical host contains 2 additional, critical components. This host (glados.lab.none) functions as a workstation, but supplies one additional infrastructure component (lns1) and a management client (fenster). Additionally, this host already has an OS installed (CentOS 6.0). Given its primary role as a workstation, the additional components are virtualized via Oracle's VirtualBox, which I already had installed (I know, not VMware Workstation, etc.). The workstation is connected via a crossover cable to the vSphere lab host's 82574L GE NIC. The details for this host are:

- 1 x physical host running CentOS 6.0 (glados.lab.none)
  + connected and IP'd on the management network (10.0.129.0/24, lab.none. domain)
  + runs VirtualBox 4.1.8 supporting VMs lns1 and fenster
    - VirtualBox bridges the 2 lab component VMs across the physical interface connected via crossover cable to the physical vSphere lab host
- 1 x generic infrastructure host (lns1.lab.none)
  + connected and IP'd on the management network, with routing configured to all lab networks
  + runs CentOS 6.2
  + serves as a name server running bind 9.8.1-p1, supporting all lab domains:
    - lab.none.
    - stor.lab.none.
    - vmo.lab.none.
    - vms.lab.none.
  + serves as a network time server for all hosts and VMs
  + has the VMware vSphere CLI and Perl SDK packages installed
  + configured with 1 vCPU and 1 GB of memory
  + storage (thin provisioned):
    - disk0: 8 GB (system disk)
- 1 x management host (fenster.lab.none)
  + connected and IP'd on the management network
  + runs Windows Server 2008
  + has the VMware vSphere Client installed
  + configured with 1 vCPU and 1 GB of memory
  + storage (thin provisioned):
    - disk0: 14 GB (system disk)
    - disk1: 10 GB (data disk)

The above completes the initial vSphere 5 lab infrastructure layout, accounting for all necessary components. In following posts, I'll include some of the main configuration details for the various infrastructure components. For instance, part 2 will discuss the various configurations for the second physical host (the management host) and its VMs.
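Since lns1 serves as the name server for all four lab domains, it can help to lay out which subnet belongs to which zone. The Python sketch below maps each lab network to its forward domain and derives the matching reverse (in-addr.arpa) zone name for a /24. The post doesn't say whether reverse zones were configured on lns1, so treat the reverse names, and the helper names `reverse_zone` and `domain_for`, as hypothetical illustration rather than the actual bind configuration.

```python
import ipaddress

# Lab networks and the DNS domain served for each (from the layout above).
LAB_NETWORKS = {
    "10.0.129.0/24": "lab.none.",       # management
    "10.0.130.0/24": "stor.lab.none.",  # storage
    "10.0.131.0/24": "vmo.lab.none.",   # vMotion
    "10.0.132.0/24": "vms.lab.none.",   # VM network
}


def reverse_zone(cidr):
    """Reverse-lookup zone for a /24, e.g. 10.0.129.0/24 -> 129.0.10.in-addr.arpa."""
    net = ipaddress.ip_network(cidr)
    if net.version != 4 or net.prefixlen != 24:
        raise ValueError("this sketch only handles IPv4 /24 networks")
    first_three = str(net.network_address).split(".")[:3]
    return ".".join(reversed(first_three)) + ".in-addr.arpa"


def domain_for(ip):
    """Return the lab domain whose network contains ip, or None if none does."""
    addr = ipaddress.ip_address(ip)
    for cidr, domain in LAB_NETWORKS.items():
        if addr in ipaddress.ip_network(cidr):
            return domain
    return None


if __name__ == "__main__":
    for cidr, domain in LAB_NETWORKS.items():
        print(f"{domain:<16} {cidr:<15} {reverse_zone(cidr)}")
```

For example, the storage network 10.0.130.0/24 pairs the stor.lab.none. forward zone with the 130.0.10.in-addr.arpa reverse zone.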

NOTES

Note 0:

I originally wrote this with an MTU of 9000, but have since updated the MTU to 8106 to reflect the actual configuration seen later. The MTU of 8106 was chosen because that is the MTU size the datastore (dstor0) supports.
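One way to check that an MTU of 8106 actually holds end to end on the storage and vMotion networks is to send pings with the don't-fragment bit set and the largest payload that fits in one packet. For IPv4 without options, that payload is the MTU minus 28 bytes (a 20-byte IP header plus an 8-byte ICMP header). The Python sketch below only does that arithmetic; the exact ping options for setting don't-fragment differ between ESXi, Solaris, FreeBSD, and CentOS, so check each tool's documentation rather than relying on any particular flag.

```python
# Header sizes for an IPv4 ICMP echo request (no IP options).
IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def max_ping_payload(mtu):
    """Largest ICMP echo payload that fits in a single unfragmented IPv4 packet."""
    if mtu <= IPV4_HEADER_BYTES + ICMP_HEADER_BYTES:
        raise ValueError("MTU too small for an ICMP echo")
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


if __name__ == "__main__":
    # Standard Ethernet, the lab's storage/vMotion MTU, and the originally planned 9000.
    for mtu in (1500, 8106, 9000):
        print(f"MTU {mtu}: max payload {max_ping_payload(mtu)} bytes")
```

For the 8106 MTU this works out to an 8078-byte payload; if a don't-fragment ping of that size succeeds between, say, vesx0 and dstor0 on the storage network, the path is likely configured consistently.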

See also:

- vSphere 5 Lab Setup pt 2: The Management Hosts
- vSphere 5 Lab Setup pt 3: Installing the Physical ESXi Host
- vSphere 5 Lab Setup pt 4: Network Configuration on the Physical ESXi Host
- vSphere 5 Lab Setup pt 5: Infrastructure VM Creation
- vSphere 5 Lab Setup pt 6: Infrastructure VM Configurations and Boot Images
- vSphere 5 Lab Setup pt 7: First VM Boot